Content Pipelines and the Death of Innocent Scrolling

Why you need to remember what you watched 10 swipes ago.

Social media has become an environment where you must be a skeptical participant unless you wish to be taken advantage of by the social engineers who develop the platforms. By now it is well known that social media tracks each of our actions. Every like, share, or comment is used to decide what content the platform shows us, its users, next. As a consequence, everyone's experience on a social media application differs from everyone else's.

Traditional curation algorithms record your engagement with other accounts and use it to recommend more of those accounts' content to you. If you like a post by Mark Cuban or Jimmy Kimmel, the platform will show you more posts by Mark Cuban and Jimmy Kimmel, whether or not you choose to follow them. In the last decade or so, curation algorithms have expanded to show you not only content from the accounts you engage with, but also the content that users with engagement habits similar to yours engage with. The platform is attempting to segment you into an arbitrary social group and show you content that others in that group are interested in.

The problem with this model is that people tend to have fairly diverse interests. While we may share an interest in one topic with other users, we often have little else in common with them. However, the curation algorithm doesn't recognize these nuanced differences and still propagates other users' browsing interests to us. This model of algorithmic curation gives rise to “content pipelines”. Content pipelines are the consequence of segmenting you with similar users and showing you content that those users interacted with. For example, if you're interested in video games, curation algorithms may show you video game art, music, and news because accounts with overlapping interests engage with that content. But your interest in video games also overlaps with the terminally online culture warriors of the gamercel community, so your feed may slip into showing you toxic culture slop from this tribe of users.

If we choose to engage with this peripheral content from users with overlapping interests, the curation algorithms will feed us more of it. Whether the engagement is positive or negative, a mild like or an intense comment, does not matter. Engagement is engagement. Anything short of blocking the account will pull you further down the pipeline.
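To make this concrete, here is a minimal sketch of the kind of user-based recommendation described above, where you are shown what similar users engage with. It is not how any particular platform actually works: the engagement logs, the users (`alice`, `bob`, `carol`), the topic names, and the overlap-based similarity score are all hypothetical. The one deliberate design choice is that only the magnitude of engagement counts, mirroring the point that engagement is engagement.

```python
from collections import Counter

# Hypothetical engagement logs: user -> {topic: engagement score}.
# Positive scores stand in for likes/shares, negative ones for angry comments.
engagement = {
    "you": {"video games": 3, "game art": 2},
    "alice": {"video games": 2, "game music": 3, "game news": 1},
    "bob": {"video games": 3, "culture war": 4},
    "carol": {"culture war": 3, "manosphere": 4},
}


def signal(score: float) -> float:
    """Engagement is engagement: only the magnitude counts, never the sign."""
    return abs(score)


def similarity(a: dict[str, float], b: dict[str, float]) -> float:
    """Overlap of two users' engaged topics, weighted by engagement strength."""
    return sum(min(signal(a[t]), signal(b[t])) for t in a.keys() & b.keys())


def recommend(user: str, logs: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Rank topics the user hasn't engaged with by what similar users engage with."""
    mine = logs[user]
    scores: Counter = Counter()
    for other, theirs in logs.items():
        if other == user:
            continue
        sim = similarity(mine, theirs)
        if sim == 0:
            continue  # no shared interests, so this user doesn't influence the feed
        for topic, score in theirs.items():
            if topic not in mine:
                scores[topic] += sim * signal(score)
    return scores.most_common()
```

Here `recommend("you", engagement)` ranks "culture war" first, purely because `bob` also likes video games. If you then leave an angry comment on a culture-war post (say, `engagement["you"]["culture war"] = -5`), the negative score still counts as engagement: it makes you look similar to `carol`, and "manosphere" jumps to the top of the feed.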

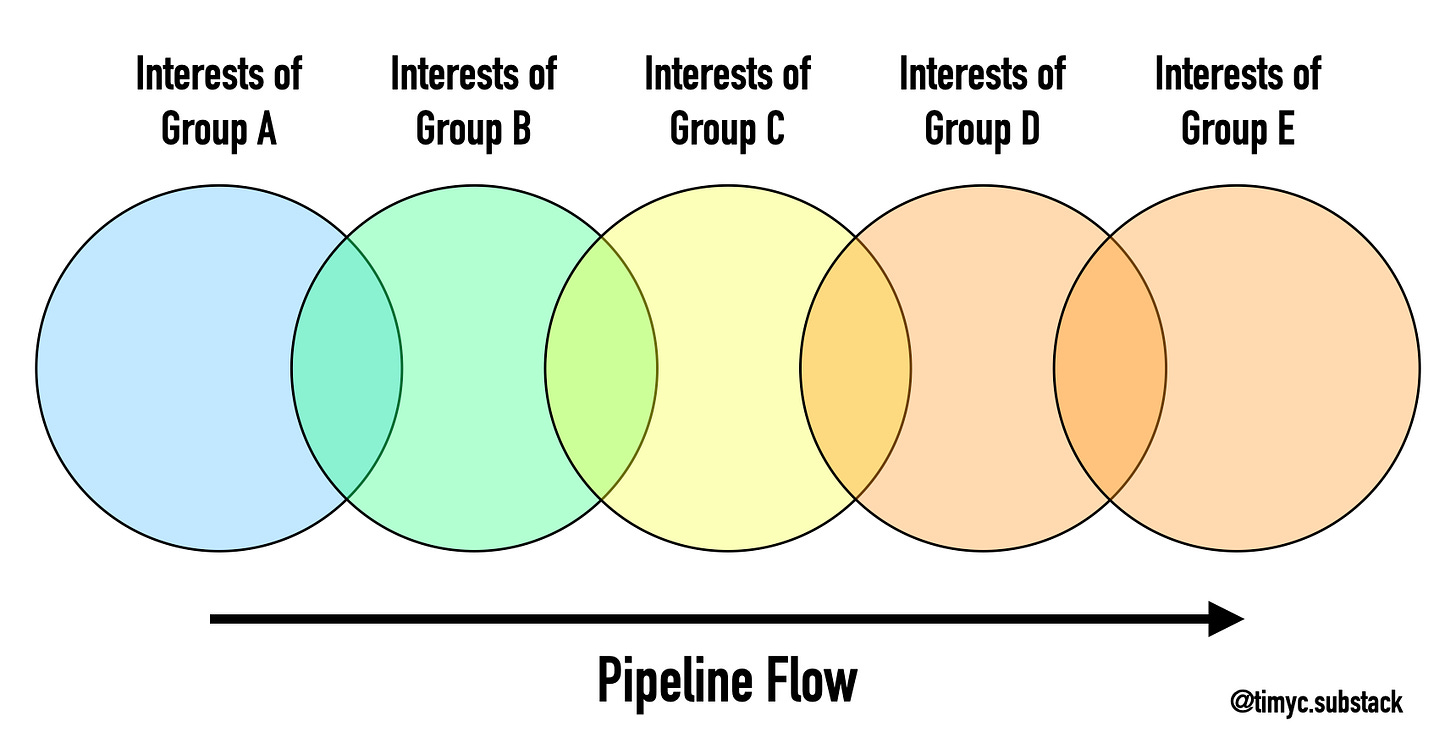

Such a model of content curation can feed a chained series of seemingly unrelated interests to your account. I like to imagine this as a series of linked Venn diagrams (see below). Each circle represents the unique interests of a group, but it overlaps with other interest groups. If you engage with an overlapping region, you get sucked into the unique interests of the neighboring group.
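The chained Venn diagrams can be sketched as a walk across overlapping sets. This is a toy model under loose assumptions: the interest groups and topics below are hypothetical, and each step simply moves to the first not-yet-seen topic that shares a group with the current one (scanning groups in order and topics alphabetically within each group, so the walk is deterministic). Blocking is modeled as removing a topic from consideration entirely.

```python
from collections.abc import Collection

# Hypothetical interest groups (the circles); neighboring groups share one topic (the overlaps).
GROUPS = [
    {"astronomy", "space launches"},
    {"space launches", "elon musk"},
    {"elon musk", "podcast clips"},
    {"podcast clips", "jordan peterson"},
]


def pipeline(start: str, steps: int, blocked: Collection[str] = ()) -> list[str]:
    """Follow overlaps from `start` for up to `steps` hops, skipping blocked topics."""
    path = [start]
    seen = {start}
    current = start
    for _ in range(steps):
        # Unseen, unblocked topics that share a circle with the current topic.
        candidates = [
            topic
            for group in GROUPS
            if current in group
            for topic in sorted(group)
            if topic not in seen and topic not in blocked
        ]
        if not candidates:
            break  # nothing left to pull us along: the pipeline ends here
        current = candidates[0]
        seen.add(current)
        path.append(current)
    return path
```

In this toy model, `pipeline("astronomy", 10)` drifts from astronomy through space launches, Elon Musk, and podcast clips to Jordan Peterson, even though no single group contains both ends. Passing `blocked={"elon musk"}` stops the walk at space launches.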

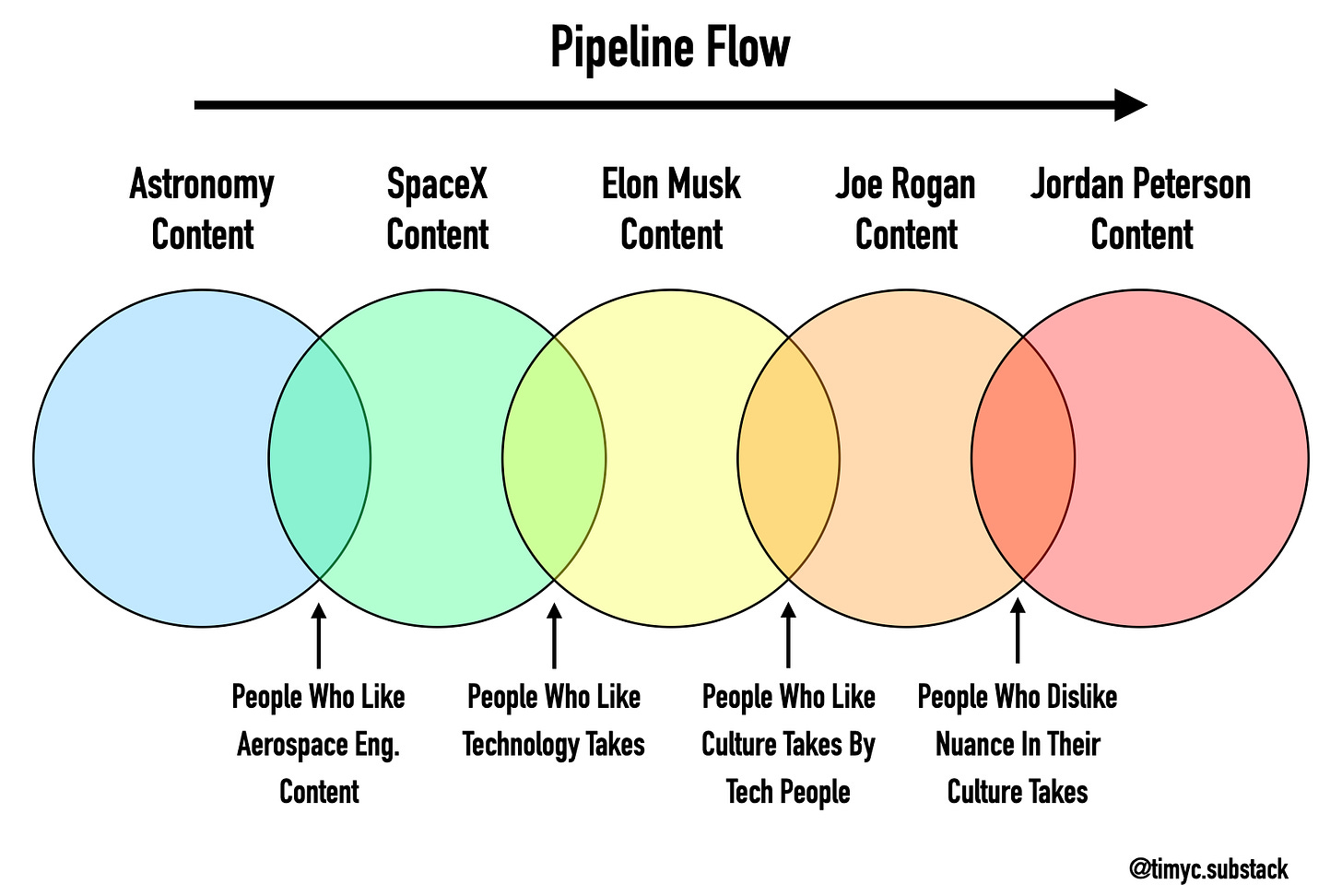

In my early 20s, when I was more active in exploring social media platforms, I had to learn to avoid the toxicity that these content pipelines introduced to my feeds. At the time I worked at SpaceX, and fittingly, my social media algorithms fed me astronomy content. This included podcast clips from Neil deGrasse Tyson, old quips from Carl Sagan, and clips from Kerbal Space Program players. From this starting point, YouTube Shorts, Instagram Reels, and TikTok safely assumed that because I enjoyed space content, I would also engage with SpaceX content. So the platforms started showing me clips of the rocket launches I was already inundated with at work, but hey, it was a good ego stroke at the time.

But the content pipeline kept developing. From SpaceX, it naturally started sending me Elon Musk interview clips; at the time, I was indifferent toward him. His clipped quotes seemed normal enough, but I didn't care to see more of the guy than I already had to. Clips of Elon Musk turned into snippets of Joe Rogan interviewing Elon Musk. Those snippets turned into plain Joe Rogan clips, which turned into clips of Joe Rogan interviewing Jordan Peterson, which finally turned into plain Jordan Peterson clips. I'm sure I could have let the content pipeline continue, but once I started seeing Joe Rogan interview people unrelated to space, I was swiping away too quickly, and the algorithm likely picked up what I was putting down.

This is a typical example of a content pipeline, specifically an astronomy-to-modern-conservatism pipeline. Unfortunately, to recognize this pipeline you need to be self-aware of your own journey through the platform. On TikTok, you need to be able to answer the question “what video did I watch 10 swipes ago?” If you fully surrender mental control to the algorithmic platform, you will not critically assess the content entering your newsfeed. This self-awareness is antithetical to the design of TikTok, which aims to destroy any form of long-term attention.

Once I became aware of these content pipelines, I became more critical of how I interact with social media platforms. I now actively avoid interacting with certain content and rapidly swipe away short-form content that I know will steer my curation algorithm in a direction I dislike.

For instance, I occasionally see posts on the “male loneliness epidemic” that I agree with. This is a topic I personally care about, but from a more wholesome perspective than the one the manosphere approaches it with. Yet I recognize that the manosphere has an outsized influence on this conversation, and engaging with such posts could steer my content pipeline toward less scrupulous opinions on the topic: opinions that attempt to leverage resentment and cast blame on external factors. As a result, I choose not to like, comment on, or share posts on this topic, knowing that doing so could damage the productivity of my newsfeed.

On YouTube Shorts or Instagram Reels (sorry, I refuse to touch TikTok with a 10-foot pole), I can feel my algorithm tugging itself toward politically polarizing content. However, I mostly use these short-form platforms to watch videos related to my hobbies. Still, the app occasionally throws in a video about “the current thing”.

Part of me finds it unfortunate that we have let misinformation spread so rampantly across the internet that we now need to be considerably more critical of how we choose to engage with it. Furthermore, as an amateur content creator, I know what a pain in the ass it can be to gain recognition for the work you put out. I'm not a fan of making it even harder for content creators, especially those trying to change the dialogue around an abused topic.

Yet being critical of the media we consume is nothing new from a historical perspective. Perhaps it was easier in earlier times, since you engaged with news and media simply by paying for it. If you disliked the content, you just didn't buy it. But now media and content are algorithmically shoved down our throats on almost every social networking platform. Which content we receive is now determined in far subtler ways than exchanging a nickel for a paper from the newspaper boy. In an era when media literacy already seems so low, social media regulation is nearly non-existent, and market incentives drive misinformation, the general populace would do well to be more critical of how they choose to engage with content on their platforms.

*I cannot actually guarantee how productive your newsfeed will be. That's up to the devs at Substack.